Intelligent Material Handling System

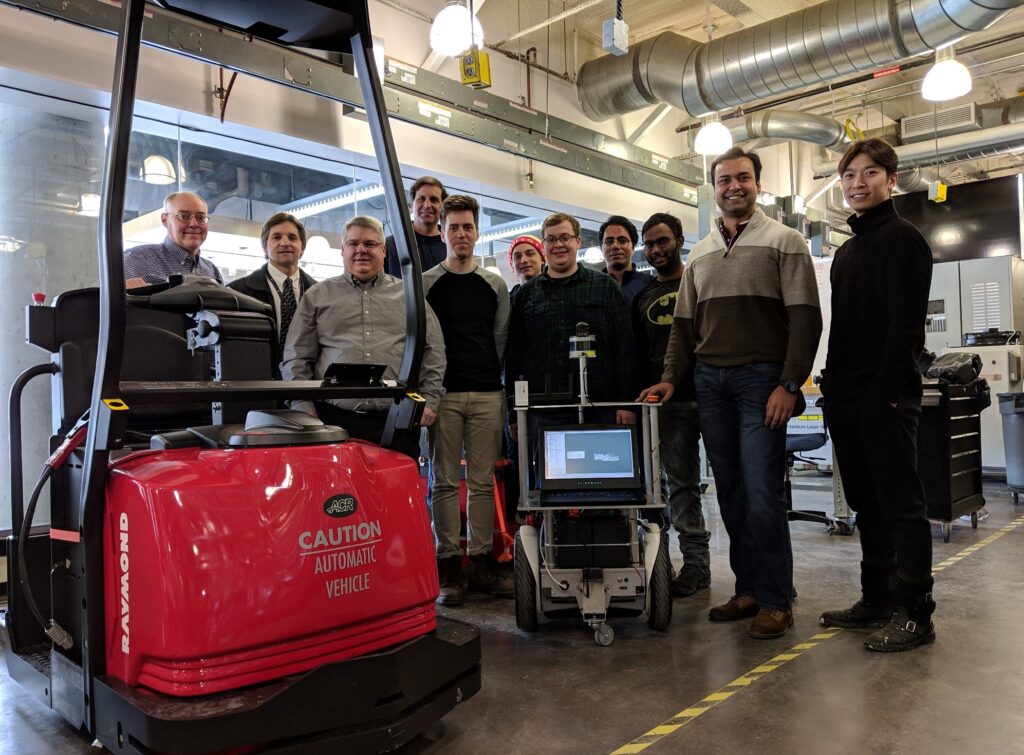

The Intelligent Material Handling System (iMHS) is a multidisciplinary research project supported by the Toyota Material Handling North America University Research Program. The project addresses several active areas of research in the material handling industry, including autonomous inventory management, robust indoor robot localization, distributed cybersecurity, and intelligent collaborative task dispatching. My main areas of focus were sensor integration, autonomous platform bring-up, and deep learning research.

During my two years on the project, I served as the lead software developer and group manager. I coordinated student presentations for internal and customer demonstrations, and I either performed or vetted the integration of every new sensor. I developed the basic robot movement capabilities and performed the complete ROS navigation stack bring-up. I also wrote new data collection routines for aggregating multi-modal localization data and coordinated many collection sessions over several semesters. This work laid the groundwork for several machine-intelligence-based localization publications, which are listed in my publications section. In addition to research and integration, I managed all of the robot laptops and lab workstations, which involved TensorFlow/PyTorch/Caffe/ROS Melodic environment setup and CUDA device driver installation.

Development began on a small differential-drive robot platform based on a Ninebot commuter device. This platform serves as a rapid integration and testing system for new technologies: when we want to try out a new sensor or integrate new navigation functionality, these small platforms can be deployed quickly for experimentation. Four of them have been constructed for multi-agent testing. My contributions to the small platform include the complete ROS navigation bring-up and the integration of many different sensors, including a Velodyne VLP-16 3D LiDAR, a Kodak omnidirectional camera, multiple Intel RealSense D435i depth cameras, an Intel RealSense T265 tracking camera, Decawave ultra-wideband indoor positioning beacons, TP-Link 60 GHz wireless routers, and a Hokuyo 2D LiDAR. I also wrote a custom base controller node that handles odometry and kinematics. This is the layer that translates the higher-level navigation movement commands into independent wheel velocities that the platform can achieve. The small robot platform was the testbed for many of the localization papers.
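To make the base controller's role concrete, here is a minimal sketch of standard differential-drive kinematics in plain Python. It is not the project's actual node: the names `twist_to_wheel_speeds`, `integrate_odometry`, `Pose2D`, and the `wheel_separation` parameter are hypothetical, and the 0.5 m value in the usage note is purely illustrative. It assumes a body velocity command (forward speed plus turn rate) and converts it into left and right wheel speeds, then integrates wheel speeds back into a pose estimate.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Planar robot pose: position in meters, heading in radians."""
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0


def twist_to_wheel_speeds(linear, angular, wheel_separation):
    """Convert a body velocity command into (left, right) wheel speeds.

    linear is the forward speed in m/s, angular is the turn rate in rad/s
    (counter-clockwise positive), wheel_separation is the distance between
    the two drive wheels in meters. Returned speeds are in m/s at the wheel
    contact point.
    """
    half = wheel_separation / 2.0
    v_left = linear - angular * half
    v_right = linear + angular * half
    return v_left, v_right


def integrate_odometry(pose, v_left, v_right, wheel_separation, dt):
    """Advance the pose estimate by dt seconds from measured wheel speeds."""
    linear = (v_right + v_left) / 2.0
    angular = (v_right - v_left) / wheel_separation
    # Use the heading at the middle of the time step to reduce
    # integration error while the robot is turning.
    mid_heading = pose.theta + angular * dt / 2.0
    return Pose2D(
        x=pose.x + linear * math.cos(mid_heading) * dt,
        y=pose.y + linear * math.sin(mid_heading) * dt,
        theta=pose.theta + angular * dt,
    )
```

For example, with an assumed wheel separation of 0.5 m, a pure rotation command of 1 rad/s maps to wheel speeds of -0.25 m/s and 0.25 m/s, and feeding those speeds back through `integrate_odometry` turns the robot in place without changing its position. A real controller would additionally clamp the wheel speeds to what the motors can achieve and read measured speeds from wheel encoders.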

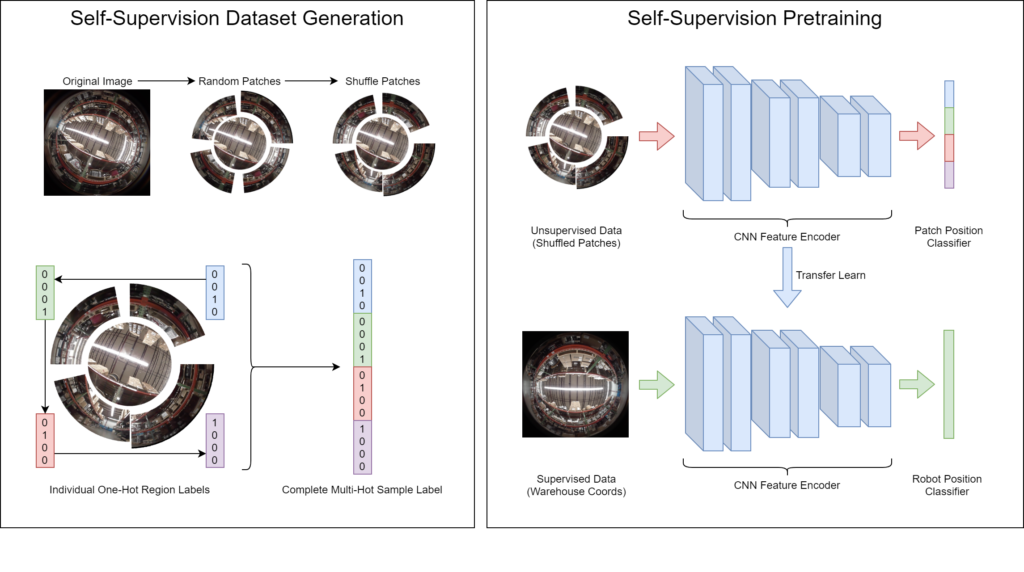

The project applies artificial intelligence and deep learning in several ways. Active areas of research include reinforcement learning for intelligent task dispatching, object detection with recent bounding-box detectors, and deep-learning-based robot localization. I was the lead on the localization research effort for these autonomous warehouse robots. My work includes classification and regression localization networks that use data from a variety of sensors, including an omnidirectional camera, a 3D LiDAR, 60 GHz wireless routers, and ultra-wideband beacons. My most recent work applies self-supervised learning techniques to augment the current position models. By pretraining on easily collected unlabeled data, deep learning models can learn features that are useful for omnidirectional camera tasks before any labeled data is used. I designed and implemented a novel radial patch pretext task for omnidirectional camera training pipelines, which is illustrated in the figure above. More information about this work and all related iMHS publications can be found in my publications section. These experiments and models were implemented using TensorFlow and PyTorch.