A Multidisciplinary Capstone Project

The RIT Autonomous People Mover is an ongoing multidisciplinary project to build an autonomous golf cart platform. The scope of our work included upgrading wiring harnesses, maintaining golf cart drive components, introducing a new deep learning pipeline for visual terrain identification, and fusion of several perception systems for autonomous wandering. My role on the senior design team was lead software engineer and my main contributions were the introduction of computer vision for hazardous terrain detection and perception sensor fusion.

The Autonomous People Mover is an ongoing senior design project with new teams taking over every year. Our team was the fifth team to work on the cart and our customer-given goal was autonomous wandering in RIT’s Sentinel Loop. When we began work on the project the golf cart was in great electrical shape with very few wiring and power concerns. Much of the basic ROS navigation framework was already configured by previous groups and provided a great starting point for us to learn.

The weakest points of the platform we inherited were the poor outdoor localization performance and weak obstacle detection capabilities. Previous groups had been given the task of implementing full autonomy with point-to-point navigation functionality. While the previous groups were successful, the previously mentioned components required more attention.

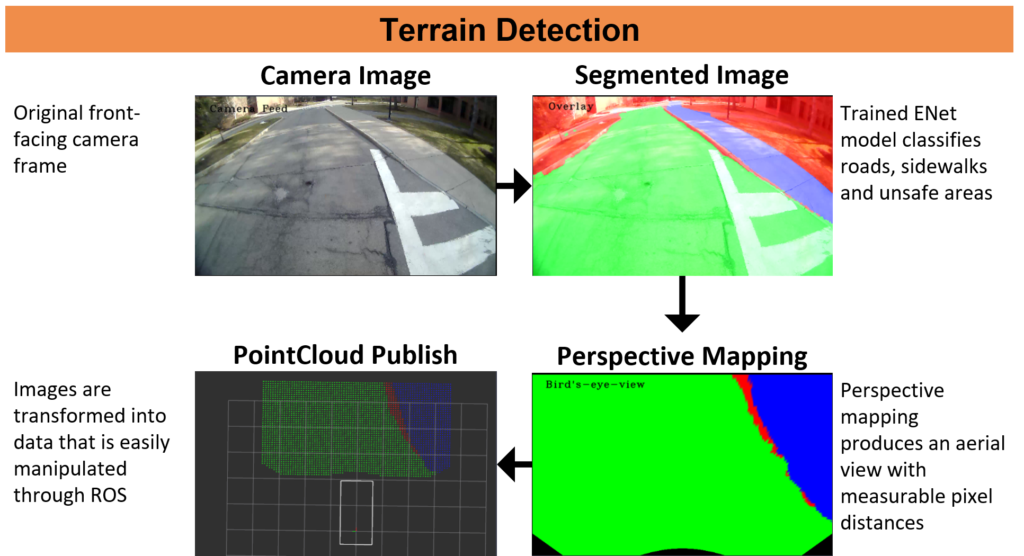

My primary contribution to the project was a new computer vision pipeline for intelligent terrain detection. Between the Velodyne VLP-16 3D and the single channel Hokuyo LiDARs mounted on the golf cart general obstacle detection performs well. Small objects on the ground however would often be undetected due to the relatively low LiDAR sensor resolution. The most prominent small obstacle in our test environment were the small sidewalk curbs bordering the road. These curbs were often not perceptible by either LiDAR and the golf cart would be permitted to drive onto them.

To alleviate this issue I implemented a new computer vision pipeline for visually detecting hazardous terrain and boundaries between drive-able regions. This was achieved using a Caffe implementation of the E-Net deep learning image segmentation model. This model is capable of assigning a class label to every pixel in an image. To adapt this model to terrain classification on RIT’s pathways, I implemented data collection and annotation routines for new data gathered on campus. I coordinated with our customer to hire additional students for annotating new images. A total of around 2300 images were gathered for training the image segmentation network.

After segmentation is performed by the model on the captured image, inverse perspective mapping is performed to transform the image into a birds-eye-view perspective. This homogeneous transform allows each pixel in the resulting image to be mapped in front of the golf cart. Intrinsic and extrinsic calibration of the camera was required for this step. The resulting data is published as a ROS pointcloud message for integration with the existing obstacle occupancy grid. A live model of the network running in inference mode on the golf cart is shown above.

With the successful integration of my new computer vision pipeline for terrain identification, our project iteration was able to successfully identify sidewalk curbs and road boundaries in situations where the LiDAR sensors had previously failed.